Text Processing¶

Topics¶

- Overview

- Parsing, Stemming, Lemmatization

- Named Entity Recognition

- Stop Words

- Frequency Analysis

- Document Summarization

Toolkits¶

NLTK: NLP toolkit¶

Book: http://www.nltk.org/book/

Wiki: https://github.com/nltk/nltk/wiki

Corpus: http://www.nltk.org/nltk_data/

spaCy: another NLP toolkit¶

Simpler to use than NLTK (but usually fewer knobs)

Models: https://spacy.io/usage/models

Tutorial: https://spacy.io/usage/spacy-101

Setup¶

Run this command from an Anaconda prompt (within the mldds03 environment):

(mldds03) conda install nltk spacy scikit-learn pandasWhat is Text Processing?¶

- A sub-field of Natural Language Processing (NLP)

- Natural Language Processing is ...

- Teaching machines to understand and produce language (text, speech)

- A combination of computer science and computational linguistics

Text Processing Tasks¶

- Word categorization and tagging: part of speech, type of entity

- Semantic Analysis: finding meanings of documents

- Topic Modeling: finding topics from documents

- Document similarity: comparing if two documents are semantically similar

- etc.

Note: Speech is text processing + acoustic model

Parsing, Stemming & Lemmatization¶

- Tokenization: splitting text into words

- Sentence boundary detection: splitting text into sentences

- Stemming: finding word stems

- stating => state, reference => refer

- Lemmatization: finding the base form of words

- was => be

Tokenization¶

- Segmenting text into words, punctuation, etc.

- Rule-based

# Download the English model

# You can find other models here: https://spacy.io/models/en

!python -m spacy download en_core_web_sm

text = u"This is a test. A quick brown fox jumps over the lazy dog."

import spacy

nlp = spacy.load('en_core_web_sm')

doc = nlp(text)

# sentence tokenizer

for sent in doc.sents:

print()

print(sent)

# word tokenizer

for token in doc:

print(token.text)

spacy.explain('DET')

from spacy import displacy

nlp = spacy.load('en_core_web_sm')

doc = nlp(text)

displacy.render(doc, style='dep', jupyter=True, options={'distance': 140})

Tokenization with NLTK¶

http://www.nltk.org/api/nltk.tokenize.html

nltk.tokenize

- sent_tokenize

- word_tokenize

- wordpunc_tokenize

# Download the Punkt sentence tokenizer

# https://www.nltk.org/_modules/nltk/tokenize/punkt.html

# List of available corpora: http://www.nltk.org/book/ch02.html#tab-corpora

import nltk

nltk.download('punkt')

from nltk.tokenize import sent_tokenize

# list of sentences

sent_tokenize(text)

from nltk.tokenize import word_tokenize

# flat list of words and punctuations

word_tokenize(text)

from nltk.tokenize import sent_tokenize, word_tokenize

sentences = sent_tokenize(text)

# list of lists

[word_tokenize(sentence) for sentence in sentences]

from nltk.tokenize import wordpunct_tokenize

text2 = "'The time is now 5.30am,' he said."

print(word_tokenize(text2))

print(wordpunct_tokenize(text2))

# Part of speech tagging

import nltk

nltk.download('averaged_perceptron_tagger')

from nltk.tokenize import sent_tokenize, word_tokenize

sentences = sent_tokenize(text)

sentences = [word_tokenize(sentence) for sentence in sentences]

[nltk.pos_tag(word) for word in sentences]

spacy.explain('JJ')

Twitter-aware tokenizer¶

nltk.tokenize.TweetTokenizer

http://www.nltk.org/api/nltk.tokenize.html#module-nltk.tokenize.casual

from nltk.tokenize import TweetTokenizer

tknzr = TweetTokenizer()

tweet = "This is a cooool #dummysmiley: :-) :-P <3 and some arrows < > -> <--"

tknzr.tokenize(tweet)

tknzr = TweetTokenizer(strip_handles=True, reduce_len=True)

tweet = '@remy: This is waaaaayyyy too much for you!!!!!!'

tknzr.tokenize(tweet)

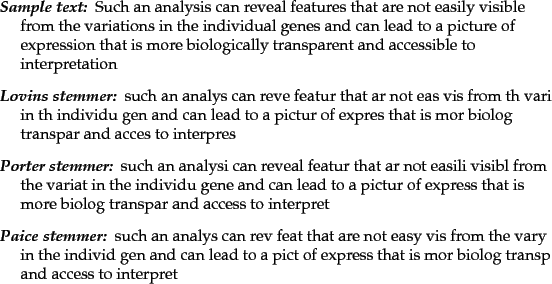

Stemming vs. Lemmatization¶

- Stemming uses rule-based heuristics

- ponies => poni

- Quicker, but less precision

- Lemmatization uses vocabulary and morphological analysis

- ponies => pony

- For English, not much improvement over stemming because context of word use is more important

https://nlp.stanford.edu/IR-book/html/htmledition/stemming-and-lemmatization-1.html

Porter Stemmer¶

- 5 sequential phases of word reductions

- Applies rules such as "sses -> ss", "ies => i"

(image: https://nlp.stanford.edu/IR-book/html/htmledition/stemming-and-lemmatization-1.html)

from spacy.lemmatizer import Lemmatizer

from spacy.lang.en import LEMMA_INDEX, LEMMA_EXC, LEMMA_RULES

nlp = spacy.load('en_core_web_sm')

lemmatizer = Lemmatizer(LEMMA_INDEX, LEMMA_EXC, LEMMA_RULES)

doc = nlp(text)

for token in doc:

print(lemmatizer(token.text, token.pos_))

from nltk.stem import PorterStemmer

from nltk.tokenize import word_tokenize

stemmer = PorterStemmer()

tokens = word_tokenize(text)

for token in tokens:

print(stemmer.stem(token))

import nltk

nltk.download('wordnet')

from nltk.stem import WordNetLemmatizer

from nltk.tokenize import word_tokenize

lemmatizer = WordNetLemmatizer()

tokens = word_tokenize(text)

for token in tokens:

print(lemmatizer.lemmatize(token))

Named Entity Recognition¶

Find and classify entities within text

- Persons

- Organizations

- Locations

- Time expressions

- Quantities

- Phone numbers

- etc

Grammar-based models, trained classifiers

Can be corpus-dependent, see https://spacy.io/api/annotation#named-entities

Named Entity Recognition with spaCy¶

nlp = spacy.load('en_core_web_sm')

text3 = u"Flight 224 is scheduled to arrive in Frankfurt at 4pm July 5th, 2018."

doc = nlp(text3)

for entity in doc.ents:

print(entity.text, entity.label_, entity.start_char, entity.end_char)

spacy.explain('NORP')

from spacy import displacy

displacy.render(doc, style='ent', jupyter=True)

import nltk

nltk.download('maxent_ne_chunker')

nltk.download('words')

from nltk.tokenize import sent_tokenize, word_tokenize

sentences = sent_tokenize(text3)

sentences = [word_tokenize(sentence) for sentence in sentences]

# Input to ne_chunk needs to be a part-of-speech tagged word

sentences_pos_tagged = [nltk.pos_tag(word) for word in sentences]

[nltk.ne_chunk(word_pos) for word_pos in sentences_pos_tagged]

Stop words¶

Stop words are high-frequency words that don't contribute much lexical content:

- the

- a

- to

NLP libraries usually include a corpus of stop words.

Stop word lists:

from spacy.lang.en.stop_words import STOP_WORDS

STOP_WORDS

# Deutsch

from spacy.lang.de.stop_words import STOP_WORDS

STOP_WORDS

doc = nlp(text3)

for token in doc:

print(token.text, token.is_stop)

# Adding stop words

from spacy.lang.en.stop_words import STOP_WORDS

STOP_WORDS.add('MLDDS')

doc = nlp(u"Sorry I'm not free tonite, I have MLDDS (lowercase: mldds).")

for token in doc:

print(token.text, token.is_stop)

Stop words with NLTK¶

nltk.corpus.stopwords# Download corpus

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

stopwords.words('english')

stopwords.words('german')

tokens = nltk.word_tokenize(text3)

stops = set(stopwords.words('english'))

for token in tokens:

print(token, token in stops)

# Adding stop words

stops = stopwords.words('english')

stops.append("MLDDS")

stops = set(stops)

tokens = nltk.word_tokenize(u"Sorry I'm not free tonite, I have MLDDS (lowercase: mldds).")

for token in tokens:

print(token, token in stops)

Frequency Analysis¶

Answers two questions:

How often does a word appear in a document?

How important is a word in a document?

Measure: Term Frequency - Inverse Document Frequency (TF-IDF)

Term Frequency¶

Most common formula:

$$\frac{f_{t, d}}{\sum_{t' \in d} \, f_{t',d}}$$

$f_{t, d}$: count of term $t$ in document $d$

Inverse Document Frequency¶

Most common formula:

$$log\frac{N}{\mid\{d \in D : t \in d \}\mid}$$

$N$: number of documents

$\mid\{d \in D : t \in d \}\mid$: number of documents containing term $t$

TD-IDF¶

$$tfidf(t, d, D) = tf(t, d) * idf(t, D)$$

| term | tf | idf | tf-idf |

|---|---|---|---|

| to | large | very small | closer to 0 |

| coffee | small | large | not closer to 0 |

text5 = u"This is a test.\n" \

u"The quick brown fox jumps over the lazy dog.\n" \

u"The early bird gets the worm.\n"

Computing Word Counts¶

# http://scikit-learn.org/stable/modules/feature_extraction.html

from sklearn.feature_extraction.text import CountVectorizer

nlp = spacy.load('en_core_web_sm')

doc = nlp(text5)

sentences = [sent.text for sent in doc.sents]

# Count word occurrences

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(sentences)

# convert sparse matrix to dense matrix

X_dense = X.todense()

X_dense

vectorizer.get_feature_names()

# display as a dataframe

import pandas as pd

df_wc = pd.DataFrame(X_dense, columns=vectorizer.get_feature_names())

df_wc

Computing TF-IDF¶

# http://scikit-learn.org/stable/modules/feature_extraction.html

from sklearn.feature_extraction.text import TfidfVectorizer

# TfidfVectorizer is a combination of

# CountVectorizer + TfidfTransformer

vectorizer = TfidfVectorizer()

X = vectorizer.fit_transform(sentences)

# convert sparse matrix to dense matrix

X_dense = X.todense()

print(X_dense.shape)

print(vectorizer.get_feature_names())

X_dense

# for each sentence, get the highest tf-idf

import numpy as np

terms = vectorizer.get_feature_names()

tfidf_arr = np.array(X_dense)

for i in np.arange(len(sentences)):

print(sentences[i])

sorted_idx = np.argsort(tfidf_arr[i])[::-1]

[print(terms[j], tfidf_arr[i][j]) for j in sorted_idx]

print()

Exercise¶

- Get 3-5 of your own sample sentences

- Compute the TF-IDF

- Compute the TF-IDF with stop_words filtered out:

from spacy.lang.en.stop_words import STOP_WORDS

vectorizer = TfidfVectorizer(stop_words=STOP_WORDS)

...N-grams¶

TF-IDF can be applied to N-grams (N words at a time), to try to capture some context information.

CountVectorizer(ngram_range=(minN, maxN)), ..)

TfidfVectorizer(ngram_range=(minN, maxN)), ..)text5 = u"This is a test.\n" \

u"The quick brown fox jumps over the lazy dog.\n" \

u"The early bird gets the worm.\n"

from sklearn.feature_extraction.text import CountVectorizer

import pandas as pd

nlp = spacy.load('en_core_web_sm')

doc = nlp(text5)

sentences = [sent.text for sent in doc.sents]

# Count word occurrences using 1 and 2-grams

vectorizer = CountVectorizer(ngram_range=(1, 2))

X = vectorizer.fit_transform(sentences)

# convert sparse matrix to dense matrix

X_dense = X.todense()

print(X_dense.shape)

pd.DataFrame(X_dense, columns=vectorizer.get_feature_names())

Exercise: TF-IDF with Trigrams¶

- Compute the TF-IDF for trigrams (1 to 3-grams), using your sample text.

- Try with and without stop words included

# Your code here

NLTK N-gram support¶

You can also split text into trigrams and bigrams using NLTK.

from nltk import bigrams, trigrams, ngrams, word_tokenize

# http://www.taleswithmorals.com/aesop-fable-the-ant-and-the-grasshopper.htm

text6 = "In a field one summer's day a Grasshopper was hopping about, " \

"chirping and singing to its heart's content."

words = word_tokenize(text6)

print(list(bigrams(words)))

print(list(trigrams(words)))

print(list(ngrams(words, 4)))

Workshop: Paragraph Summarization¶

- Download a corpus from NLTK

- Split the corpus into paragraphs

- Compute TF-IDF score for each word in a paragraph corresponding to its level of "importance"

- Rank each sentence using (sum of TF-IDF(words) / number of tokens)

- Extract the top N highest scoring sentences and return them as our "summary"

Credits: https://github.com/charlieg/A-Smattering-of-NLP-in-Python

Download a corpus¶

Select a corpus from http://www.nltk.org/nltk_data/.

Suggestions:

- reuters

- abc

- gutenberg

Example

# download the corpus you selected

import nltk

nltk.download('abc')

# update the import with the corpus you selected

from nltk.corpus import abc as corpus# Your code here

Explore the corpus¶

For example, try printing the raw text of one of the files:

fileids = corpus.fileids()

print(fileids)

print(corpus.raw(fileids[0])# Your code here

Split the text into paragraphs¶

NLTK doesn't include a paragraph tokenizer, so we'll try to create our own.

One logic that may work is this:

- a paragraph is detected if there are consecutive newline characters

Adapt this function to your corpus, and adjust the logic if necessary to get paragraphs.

def tokenize_paragraph(text):

"""Tokenizes text into paragraphs

Args:

text - the raw text

Returns:

A list of paragraphs for the raw text

"""

# Note: you may need to customize this logic for the

# corpus you selected

return [p for p in text.split('\n\n') if p]

# test code

paragraphs = tokenize_paragraph(corpus.raw(fileids[0]))

print(paragraphs[:5])# Your code here

Collect all paragraphs in your corpus¶

Using the paragraph tokenizer, create a list containing all paragraphs for all the files in the corpus.

We will be using this to train TF-IDF.

Some starter code:

all_paragraphs = []

for fileid in corpus.fileids():

text = corpus.raw(fileid)

# Split text into paragraphs and add to all_paragraphs

# You can use the syntax list1 = list1 + list2

#

# Your code here

...

# test code

print(len(all_paragraphs))

print(all_paragraphs[:5])# Your code here

def tokenize_and_stem(text):

"""Helper function to tokenize and stem words in text

Arg:

text: the input text

Return:

the tokenized stem words

"""

import re

from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

tokens = [word for sent in nltk.sent_tokenize(text) for word in nltk.word_tokenize(sent)]

filtered_tokens = []

# filter out any tokens not containing letters (e.g., numeric tokens, raw punctuation)

for token in tokens:

if re.search('[a-zA-Z]', token):

filtered_tokens.append(token)

stems = [stemmer.stem(t) for t in filtered_tokens]

return stems

Compute TF-IDF¶

- Treating a paragraph as a document, compute the TF-IDF using TfidfVectorizer

- Pass

tokenize_and_stemas a tokenizer to TfidfVectorizer - Filter out stop words in TfidfVectorizer

fit_transformthe TfidfVectorizer (this may take about a minute or two)

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(tokenizer=tokenize_and_stem, stop_words='english')

...# Your code here

Explore the TF-IDF matrix¶

Explore the TF-IDF matrix, counting the terms, documents, and printing the first few terms

feature_names = vectorizer.get_feature_names()

# number of terms

print("Number of terms:", len(feature_names))

# number of documents (paragraphs)

print("Number of paragraphs:", tfidf.shape[0])

# first 20 terms

print(feature_names[:20])# Your code here

Paragraph Summarization¶

- Pick a random paragraph

- Tokenize the paragraph into sentences

- Rank each sentence by getting the average word score for it

- Extract the top N highest scoring sentences and return them as our "summary"

import random

# Get a random index for all_paragraphs

paragraph_index = random.randint(0, len(all_paragraphs)-1)

paragraph = all_paragraphs[paragraph_index]

# Tokenize the selected paragraph into sentences

# for each sentence, compute the sum of TF-IDF divided by tokens

sentence_scores = []

for sentence in sent_tokenize(paragraph):

tfidf_sum = 0

sent_tokens = tokenize_and_stem(sentence)

feature_tokens = [t for t in sent_tokens if t in feature_names]

for ft in feature_tokens:

tfidf_sum += tfidf[paragraph_index, feature_names.index(ft)]

sentence_score = tfidf_sum / len(sent_tokens)

sentence_scores.append((sentence_score, sentence))

# Get the top-N scores and create the summary

# in case paragraph has less than 2 sentences

n = min(2, len(sentence_scores))

# sort by sentence_score

sentence_scores.sort(key=lambda x: x[0], reverse=True)

print('*** SUMMARY ***')

for summary_sentence in sentence_scores[:n]:

print(summary_sentence[1], '(score: %.2f)' % summary_sentence[0])

print('\n*** ORIGINAL ***')

print(paragraph)

print('\n*** SENTENCE SCORES ***')

for (score, sentence) in sentence_scores:

print(score, sentence)